Week #17 5/20-5/27

- Ryan Smith

- May 27, 2024

Updated: May 27, 2024

Ryan:

I spent this week transferring the OpenCV code from my laptop to the Raspberry Pi and optimizing it to run on the Pi. The first step was to install OpenCV on the Pi using the apt-get command. Once it was installed, I checked the version with a print command run directly in the terminal.
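The same version check can also be done from a short script, sketched below. The helper name `opencv_version` is made up for illustration, and the apt package name in the comment is an assumption, since the exact package installed is not stated above.

```python
import importlib


def opencv_version():
    """Return the installed OpenCV version string, or None if cv2 is missing."""
    try:
        cv2 = importlib.import_module("cv2")
    except ImportError:
        return None
    return cv2.__version__


if __name__ == "__main__":
    version = opencv_version()
    if version is None:
        # Assumed package name; only the use of apt-get is known.
        print("OpenCV not found; try: sudo apt-get install python3-opencv")
    else:
        print("OpenCV version:", version)
```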

The next step was to execute the code on the Pi and open multiple cameras simultaneously. This was accomplished by creating two instances of cv2.VideoCapture. Several error messages appeared when the code was first run, so I added troubleshooting lines to the code to find out which cameras were not opening. I found that Camera 2 would not open, and after switching inputs Camera 1 would not open. Troubleshooting this issue led me to a Raspberry Pi guide page on camera software [14].

I first listed all of the video devices currently available on the Pi using the ls command. Once I noted that both video 0 and video 1 were active, I set the cameras to videos 0 and 1 respectively. This did not remedy the issue, and further troubleshooting was needed.

Running the v4l2 command showed that both cameras were active and working; however, referencing videos 0 and 1 in the code was opening Camera 2 twice. I set the cameras to videos 0 and 2 respectively, and both cameras opened when the code was executed.
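For anyone hitting the same index mismatch, a rough sketch of listing the video nodes from Python is below. It assumes a Linux system that exposes `/sys/class/video4linux`, and the function name `list_video_nodes` is hypothetical. A single camera can register more than one video node, which may be why consecutive indices did not map to separate cameras here.

```python
from pathlib import Path
import re


def list_video_nodes(sys_root="/sys/class/video4linux"):
    """Return a sorted list of (index, device name) for each videoN node."""
    nodes = []
    root = Path(sys_root)
    if not root.is_dir():
        return nodes
    for entry in root.iterdir():
        match = re.fullmatch(r"video(\d+)", entry.name)
        name_file = entry / "name"
        if match is None or not name_file.is_file():
            continue  # skip anything that is not a videoN node
        nodes.append((int(match.group(1)), name_file.read_text().strip()))
    nodes.sort()
    return nodes


if __name__ == "__main__":
    for index, name in list_video_nodes():
        # Several indices with the same name usually belong to one camera.
        print(f"/dev/video{index}: {name}")
```

If the same camera name shows up under several indices, trying the lowest index for each camera in `cv2.VideoCapture` is a reasonable first guess.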

The code from the previous week that runs the door opening sequence was added to the new OpenCV code and tested. If a dog is detected, the open sequence is run. This was tested using a multimeter, which read 3.3V on pin 17 when the bounding box appeared.
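The detection-to-door logic can also be exercised without the Pi or a multimeter. The sketch below is only an illustration: `FakeMotor` is a hypothetical stand-in for `gpiozero.Motor` that records calls instead of driving pins, and `open_sequence` and `any_dog` are made-up names. The default delays mirror the 2 second travel and 5 second hold used in the code that follows.

```python
from time import sleep


class FakeMotor:
    """Hypothetical stand-in for gpiozero.Motor that records calls."""

    def __init__(self):
        self.calls = []

    def forward(self):
        self.calls.append("forward")

    def backward(self):
        self.calls.append("backward")

    def stop(self):
        self.calls.append("stop")


def open_sequence(motor, travel_s=2, hold_s=5, wait=sleep):
    """Roll the door up, hold it open, then roll it back down."""
    motor.forward()
    wait(travel_s)
    motor.stop()
    wait(hold_s)
    motor.backward()
    wait(travel_s)
    motor.stop()


def any_dog(*counts):
    """True when at least one camera reported a detection."""
    return any(count > 0 for count in counts)


if __name__ == "__main__":
    motor = FakeMotor()
    if any_dog(0, 1):
        open_sequence(motor, wait=lambda seconds: None)  # skip real delays
    print(motor.calls)
```

Passing the wait function in as a parameter keeps the real `sleep` for the door while letting a dry run finish instantly.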

Code:

    import cv2
    from gpiozero import Motor
    from time import sleep

    # Assign pins 17 and 27 for forward and backward rotation
    doormotor = Motor(17, 27)

    def rollup():
        # This function rolls up the door
        doormotor.forward()
        sleep(2)
        doormotor.stop()

    def rolldown():
        # This function rolls down the door
        doormotor.backward()
        sleep(2)
        doormotor.stop()

    def opensequence():
        # This function initiates the open sequence: roll up, wait, then roll down
        rollup()
        sleep(5)
        rolldown()

    # load the cascade file from the Pi desktop
    cascade_path = '/home/raspberrypi/Desktop/Haar Training/cascade2xml/myfacedetector.xml'
    cascade_file = cv2.CascadeClassifier(cascade_path)

    # function to detect the dog's face
    def dog_detection(img):
        # convert image to greyscale
        gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
        # reference the cascade file to detect images with similar features
        dog_face = cascade_file.detectMultiScale(gray, scaleFactor=1.1, minNeighbors=5, minSize=(50, 50))
        # create a bounding box around the dog in the image
        for (x, y, w, h) in dog_face:
            cv2.rectangle(img, (x, y), (x+w, y+h), (255, 0, 0), 5)
        return img, len(dog_face)

    # start video capture
    cam1 = cv2.VideoCapture(0)  # Camera 1
    cam2 = cv2.VideoCapture(2)  # Camera 2

    # stop early with a message if either camera fails to open
    if not cam1.isOpened():
        raise SystemExit("Cannot open camera 1")
    if not cam2.isOpened():
        raise SystemExit("Cannot open camera 2")

    while cam1.isOpened() and cam2.isOpened():
        # allow OpenCV to read frames and determine a true or false reading
        ret1, frame1 = cam1.read()
        ret2, frame2 = cam2.read()
        # if the read function is not working, report an error
        if not ret1 or not ret2:
            print("Failed to capture frames")
            break
        # run dog detection function for both cameras
        frame1_with_dogs, num_dogs1 = dog_detection(frame1)
        frame2_with_dogs, num_dogs2 = dog_detection(frame2)
        # if a dog is detected in either camera, run the open sequence
        if num_dogs1 > 0 or num_dogs2 > 0:
            opensequence()
        # show camera feeds on screen for troubleshooting purposes
        cv2.imshow('Dog Detection Test - Camera 1', frame1_with_dogs)
        cv2.imshow('Dog Detection Test - Camera 2', frame2_with_dogs)
        # stop the loop when the q key is pressed
        key = cv2.waitKey(1) & 0xFF
        if key == ord('q'):
            break

    # release the cameras and close the video windows
    cam1.release()
    cam2.release()
    cv2.destroyAllWindows()

Nick:

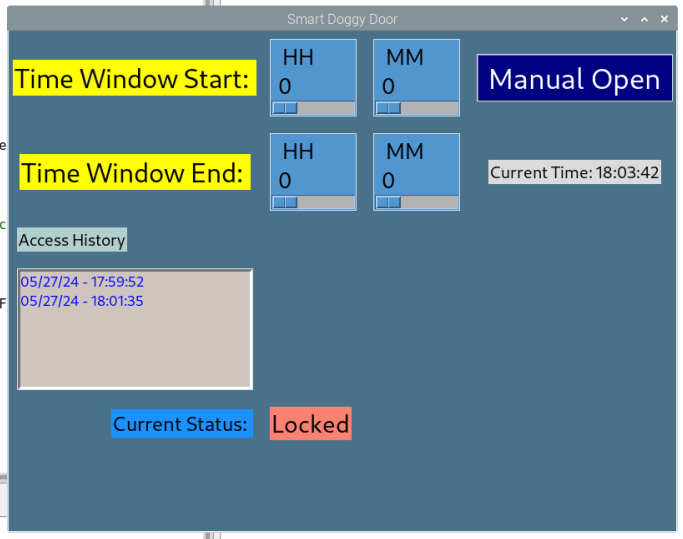

I wrote the code for the GUI using Tkinter to include the interface elements agreed on during the proposal phase. The interface includes 4 sliders to control the time window, a list showing the access timestamps, the manual button, and the clock.

Ryan's OpenCV code was adjusted so it could be integrated into the main program, and the timestamp feature was added to the code.
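The timestamp in the code below is built with the `%D` format code, which is not among Python's documented format codes and so may not behave the same on every platform. A small sketch of an equivalent format using only documented codes follows; the function name `format_access_time` is hypothetical.

```python
from datetime import datetime


def format_access_time(moment):
    """Format a datetime as MM/DD/YY - HH:MM:SS for the access log."""
    return moment.strftime("%m/%d/%y - %H:%M:%S")


if __name__ == "__main__":
    print(format_access_time(datetime.now()))
```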

At the moment, the time-window feature is still under development.
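One possible shape for the time-window check is sketched below. This is only an assumption about how the feature might work, not the finished design: the function name `in_time_window` is made up, the window is treated as including the start time and excluding the end time, and equal start and end times are treated as an empty window.

```python
from datetime import time


def in_time_window(now, start, end):
    """Return True if `now` falls in [start, end), wrapping past midnight."""
    if start == end:
        return False  # assumed: equal start and end means the window is empty
    if start < end:
        return start <= now < end
    # Window crosses midnight, e.g. 22:00 to 06:00.
    return now >= start or now < end
```

In the GUI, the start time could be built from the sliders with `time(tw_start_hour_slider.get(), tw_start_minute_slider.get())`, and likewise for the end time.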

For testing purposes, the code was set up to run on only a single camera, and a human face reference cascade file was provided.

Code:

    import cv2
    from gpiozero import Motor
    from time import sleep
    from tkinter import *
    import datetime

    # Assign pins 24 and 23 for forward and backward rotation
    doormotor = Motor(24, 23)

    def rollup():
        doormotor.forward()
        sleep(7)
        doormotor.stop()

    def rolldown():
        doormotor.backward()
        sleep(7)
        doormotor.stop()

    def opensequence():
        # log the access time, then roll up, wait, and roll down
        # (the sleep calls block the GUI until the sequence finishes)
        log_list.insert(END, datetime.datetime.now().strftime("%D - %H:%M:%S"))
        rollup()
        sleep(2)
        rolldown()

    # human face cascade file used for testing
    cascade_path = '/home/nick/Desktop/haarcascade_frontalface_default.xml'
    cascade_file = cv2.CascadeClassifier(cascade_path)

    def dog_detection(img):
        gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
        dog_face = cascade_file.detectMultiScale(gray, scaleFactor=1.1, minNeighbors=5, minSize=(50, 50))
        return img, len(dog_face)

    # single camera at a reduced resolution
    cam1 = cv2.VideoCapture(0)
    cam1.set(cv2.CAP_PROP_FRAME_WIDTH, 640)
    cam1.set(cv2.CAP_PROP_FRAME_HEIGHT, 360)

    def scan():
        # read one frame and run the open sequence if a face is detected
        ret1, frame1 = cam1.read()
        if not ret1:
            print("Failed to capture frame")
            return
        frame1_with_dogs, num_dogs1 = dog_detection(frame1)
        if num_dogs1 > 0:
            opensequence()

    # main window
    main_window = Tk()
    main_window.configure(background="SkyBlue4")
    main_window.geometry("800x600")
    main_window.attributes("-fullscreen", False)
    main_window.title("Smart Doggy Door")
    main_window.option_add("*font", "SegoeUI 24")
    status = "Locked"

    # time window labels and sliders
    tw_start = Label(main_window, bg='yellow', text='Time Window Start: ')
    tw_start.grid(row=0, column=0)
    tw_end = Label(main_window, bg='yellow', text='Time Window End: ')
    tw_end.grid(row=1, column=0)
    tw_start_hour_slider = Scale(main_window, label="HH", orient=HORIZONTAL, from_=0, to=23, background='SteelBlue3', font=('SegoeUI', 20))
    tw_start_hour_slider.grid(row=0, column=1, sticky=W, padx=10, pady=10)
    tw_start_minute_slider = Scale(main_window, label="MM", orient=HORIZONTAL, from_=0, to=59, background='SteelBlue3', font=('SegoeUI', 20))
    tw_start_minute_slider.grid(row=0, column=2, sticky=W, padx=10, pady=10)
    tw_end_hour_slider = Scale(main_window, label="HH", orient=HORIZONTAL, from_=0, to=23, background='SteelBlue3', font=('SegoeUI', 20))
    tw_end_hour_slider.grid(row=1, column=1, sticky=W, padx=10, pady=10)
    tw_end_minute_slider = Scale(main_window, label="MM", orient=HORIZONTAL, from_=0, to=59, background='SteelBlue3', font=('SegoeUI', 20))
    tw_end_minute_slider.grid(row=1, column=2, sticky=W, padx=10, pady=10)

    # manual open button
    manual_button = Button(main_window, text="Manual Open", bg="Navy", fg="White", command=opensequence, font=('SegoeUI', 26))
    manual_button.grid(row=0, column=3, padx=10, pady=10)

    # access history list
    log_list = Listbox(main_window, fg='blue', bg='SeaShell3', width=25, height=6, borderwidth=3, font=('SegoeUI', 13))
    log_list.grid(row=4, column=0, padx=10, pady=10)
    history_label = Label(main_window, bg='LightCyan3', text='Access History', font=('SegoeUI', 15))
    history_label.grid(row=3, column=0, padx=10, pady=10, sticky=W)

    # status display
    status_label = Label(main_window, bg='DodgerBlue', text='Current Status: ', font=('SegoeUI', 18))
    status_label.grid(row=5, column=0, padx=10, pady=10, sticky=E)
    current_status_label = Label(main_window, bg='Salmon', text=status, font=('SegoeUI', 22))
    current_status_label.grid(row=5, column=1, padx=10, pady=10, sticky=W)

    # clock display
    current_time = Label(main_window, font=('SegoeUI', 15))
    current_time.grid(row=1, column=3, padx=10, pady=10)

    def clock():
        # update the clock, scan the camera, and reschedule every second
        now_text = datetime.datetime.now().strftime("Current Time: %H:%M:%S")
        current_time.config(text=now_text)
        scan()
        main_window.after(1000, clock)

    clock()
    main_window.mainloop()